Apple Vision Pro and the Developing XR Landscape

If the Vision Pro delivers a realistic, immersive experience, the XR landscape could change rapidly.

By Mark Day, AlensiaXR CEO

AlensiaXR is dedicated to expanding the reach of the HoloAnatomy® learning platform, which teaches human anatomy using holograms rather than costly, limited-use cadavers. Our software anchors digital 3D artifacts to real-world environments, enabling immersive exploration that is revolutionizing medical education all over the world.

The HoloAnatomy experience was previously available only on Microsoft HoloLens. Now that Apple has announced its Vision Pro, we’d like to unpack the potential of this new device while examining the evolving domain of Extended Reality, an umbrella term that includes Augmented Reality, Virtual Reality, and Mixed Reality. The major differences among these categories shape how each headset influences the way we learn, communicate, and navigate the world.

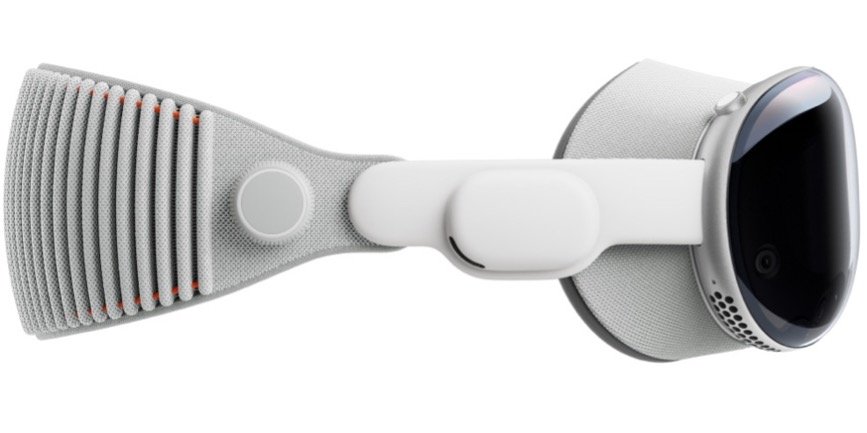

The Vision Pro isn’t available yet, so we haven’t experienced the headset first-hand, but we’re excited about Apple’s entry into the XR category. Apple has a tradition of fantastic industrial design that focuses on elegance, simplicity, and a broad appeal to all ages; no one translates enterprise technology to consumers better than Apple. As our engineers prepare a HoloAnatomy version for the Vision Pro, we’re looking forward to the inevitable acceleration of XR market momentum.

Graphic via Studio X

What’s the difference between VR, AR, and MR?

Unfortunately, some tech pundits have misinformed the public about these technologies, lumping them all in the same bucket. Let’s examine the fundamental differences…

Extended Reality (XR): The broadest term for the category of “digital 3D experience.” It does not distinguish between VR, AR, and MR.

Virtual Reality (VR): A computer-generated environment that appears to be real, making the user feel as if they are fully immersed in digital surroundings, with no access or connection to the physical world (such as Meta Quest).

Pro: Cheaper than MR because it does not have to incorporate the “real world” in any form; doing so requires more complicated cameras and sensors.

Pro: Includes hand-held input devices that enable haptic feedback—touch sensations that make it “feel” real (e.g., phone vibration).

Con: Completely isolated from the actual world, which can lead to disorientation, as well as physiological discomforts such as eye strain and nausea.

Con: Inability to connect with other people in the same space, who appear only as cartoonish avatars. Doesn’t facilitate vital human interaction.

Augmented Reality (AR): The layering of digital information over a real-world environment through a smartphone or glasses (such as Pokémon Go or Google Glass). AR is a “see-through” display with only a rudimentary relation to the actual world—there is no anchoring of digital artifacts to create the illusion of an interwoven reality.

Pro: It’s cheaper than VR and MR because there is little to no spatial reference to the physical world, so it requires fewer cameras and tracking lasers.

Con: It can only overlay digital information, and can’t create an integrated experience between the actual and virtual that feels real and cohesive.

Mixed Reality (MR): A seamless interwoven experience of 3D artifacts anchored in the world, so holograms feel like they’re actually “in the room” (like Microsoft HoloLens).

Pro: Holographic anchoring creates the appearance of virtual elements being tied to the actual world. MR headsets use depth sensors such as LiDAR (Light Detection and Ranging) to map a space, so that rendered holograms appear to be as real as a table or chair.

Pro: You are still in the actual world, significantly reducing common VR problems like eye strain, nausea, and a feeling of discombobulation. As a result, you can still make eye contact, enabling deeper connection and a more collaborative shared experience.

Con: Great software experiences are more technically complex to create, because they depend on sophisticated display systems that precisely integrate real-world and digital artifacts, which leads to greater cost.
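To make the AR/MR distinction above concrete, here is a minimal sketch of the geometry behind "anchoring," not how any particular headset implements it. It uses a simplified top-down 2D world, and every name and coordinate in it (`world_to_head`, `head_to_world`, the positions) is a hypothetical chosen for illustration. An anchored hologram keeps a fixed position in the room and must be re-projected into the viewer's frame every time the head moves; a simple overlay keeps a fixed position in the viewer's frame and therefore drifts through the room as the head moves.

```python
import math

def world_to_head(point_world, head_pos, head_yaw_rad):
    """Express a world-space point in the headset's local frame.

    Simplified top-down 2D model: head-frame x is "forward", y is "left".
    """
    dx = point_world[0] - head_pos[0]
    dy = point_world[1] - head_pos[1]
    c, s = math.cos(-head_yaw_rad), math.sin(-head_yaw_rad)
    return (c * dx - s * dy, s * dx + c * dy)

def head_to_world(point_head, head_pos, head_yaw_rad):
    """Inverse transform: where a head-frame point sits in the room."""
    c, s = math.cos(head_yaw_rad), math.sin(head_yaw_rad)
    return (head_pos[0] + c * point_head[0] - s * point_head[1],
            head_pos[1] + s * point_head[0] + c * point_head[1])

# Hypothetical values, for illustration only.
ANCHORED_HOLOGRAM_WORLD = (2.0, 0.0)   # MR-style: pinned to a spot in the room
HEAD_LOCKED_OVERLAY_HEAD = (1.0, 0.0)  # AR-style: always 1 m in front of the viewer

# The viewer starts at the origin, steps forward, then turns 90 degrees left.
poses = [((0.0, 0.0), 0.0), ((1.0, 0.0), 0.0), ((1.0, 0.0), math.pi / 2)]

for head_pos, yaw in poses:
    hologram_in_view = world_to_head(ANCHORED_HOLOGRAM_WORLD, head_pos, yaw)
    overlay_in_room = head_to_world(HEAD_LOCKED_OVERLAY_HEAD, head_pos, yaw)
    print(f"head at {head_pos}, yaw {math.degrees(yaw):.0f} deg: "
          f"hologram in view at ({hologram_in_view[0]:.2f}, {hologram_in_view[1]:.2f}), "
          f"overlay in room at ({overlay_in_room[0]:.2f}, {overlay_in_room[1]:.2f})")
```

In this sketch the hologram's room position never changes while its position in the viewer's frame does, and the overlay behaves the other way around. Keeping that re-projection accurate requires the headset to know its own pose in the room at all times, which is where the extra sensors and cost come from.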

What’s the difference between Apple and Microsoft?

The HoloAnatomy learning platform was initially designed for use with Microsoft HoloLens, which had already shipped two full generations before Apple’s arrival in the XR space. Unlike HoloLens and its clear visor, the Apple Vision Pro has an opaque “pass-through display” that uses outward-facing cameras to make it look like you’re seeing the real world, when you’re actually watching a movie of the world projected inside the headset. It’s too early to know whether this video translation will cause neurological and physiological challenges, with the brain struggling to resolve slight discrepancies between the information the retina receives and your felt experience of the real world. A major source of such discrepancies is “latency,” the delay between a real-world event and its appearance on the display.
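A rough way to see why latency matters in a pass-through display: while the head is turning, the image on the screen lags the real scene by roughly the head's angular speed multiplied by the delay. The sketch below is simple arithmetic under that assumption. The function name, the head speed, and the latency values are illustrative choices, not measurements of the Vision Pro or any other headset.

```python
def angular_misregistration_deg(head_speed_deg_per_s, latency_ms):
    """Approximate angle by which the displayed scene lags the real one.

    Assumes a constant head rotation speed over the latency window.
    """
    return head_speed_deg_per_s * (latency_ms / 1000.0)

# Illustrative inputs only: a moderate head turn and three hypothetical delays.
HEAD_SPEED_DEG_PER_S = 100.0

for latency_ms in (10.0, 20.0, 50.0):
    lag = angular_misregistration_deg(HEAD_SPEED_DEG_PER_S, latency_ms)
    print(f"{latency_ms:.0f} ms of latency -> about {lag:.1f} degrees of lag")
```

The relationship is linear, so halving the delay halves the mismatch the brain has to reconcile. A see-through visor avoids this for the real-world portion of the view, because that light reaches the eye directly.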

Apple Vision Pro

To reiterate, Mixed Reality blends real-world environments with digital content, both co-existing and interacting in real time. And it is this unique ability that makes MR ideal for immersive, interactive experiences like learning human anatomy—HoloAnatomy software is designed to work with any MR headset.

Creating high-quality immersive experiences in Mixed Reality to date has involved complicated display subsystems, high precision holographic anchoring techniques, and a stable means of creating scalable shared experiences—all of which can be quite expensive, so it’s no wonder the Vision Pro is priced where it is. The big question for Apple going forward is how much more efficiently they can optimize these systems using a pass-through display (fooling the brain with a movie of the real world vs. the real thing). If they’re successful, over time they should be able to drive down display costs in particular, especially if manufacturing failure rates are low.

The future of mixed reality will be Illuminating…

So far, the pundits seem to like the Vision Pro, with TechCrunch calling it “The platonic ideal of an XR headset.” They love the display quality and easy setup, as well as the fact that it lets you “air tap” in your lap. That’s still well behind the HoloLens, though, which tracks 25 points of articulation on each hand, giving you full gestural control over the way you interact with digital artifacts.

The collaborative workspace has great promise, but is it better than Spatial, Unity, or even Teams? And bringing 2D artifacts into a collaborative workspace is table stakes at this point.

We don’t yet know how the experience of wearing the Vision Pro will play out. If the illusion is sufficient, it could be an amazing experience (no headaches or nausea), but looking someone “in the eye” when the eye is projected on a device seems inescapably creepy, uncanny valley or not.

At this point we’re all just guessing until we can take it for a spin. We’ll be first in line.

AlensiaXR will worry about supply and availability next year (like everyone), but we’ll be ready with a Vision Pro version of HoloAnatomy software, because we believe in Apple’s long-term strategic investment in the category.

The HoloAnatomy platform uses mixed-reality technology to illuminate the human body in 3D.

Microsoft was the pioneer in this space, but Apple will drive category adoption, especially if it can empower its sprawling developer ecosystem to unleash compelling content.

HoloLens is still the high bar from a B2B perspective, but if the Apple Vision Pro delivers a realistic, immersive experience, the XR landscape could change rapidly.

We are opinionated about the quality of XR devices because it influences the quality of the pedagogical experiences we offer—learning is improved with collaborative tools. The category is still nascent, but along the way we’ll leverage the devices that provide the best possible shared experience. We chose HoloLens because it was first with the hardware. Will Apple take the lead? We’ll find out and continue to illuminate the way.

AlensiaXR: Immersive collaborative experiences that improve learning outcomes.